SLAM

Localisation in an existing map is great, but what about making a map? A map could be generated from technical or architectural drawings, however, this is likely far more difficult compared to asking the robot to make the map for itself.

Simultaneous Localisation and Mapping (SLAM) has the robot traverse an environment, making a map and localising itself in that map. Much like the cartographers of old, exploring unknown seas and coastlines, map makers had to both figure out where they were, and make a map at the same time.

There are various SLAM solutions available, however, the most accessible solutions utilise lidar and odometry. Odometry for ground vehicles is typically the fusion of dead reckoning (also known as wheel odometry) and an inertial measurement unit (IMU).

Note

UAVs or aquatic vehicles, which can have external forces due to air/water currents, can’t use dead reckoning, furthermore, converting propeller speeds into a twist prediction is also unreliable. The result is that only ground vehicles readily have a reliable estimate for odometry. For robots without reliable odometry (e.g. UAVs), typically an inertial measurement unit (IMU) is fused with visual or lidar data for SLAM. As the robot can move in three dimensions, a 2D map is replaced with a 3D representation specific to the algorthim. Using visual SLAM can be more computationally expensive than lidar scanmatching, which is a potential bottleneck for autonomy on lightweight UAVs.

In this tutorial, SLAM Toolbox will be used, as it is the default offering for Nav2 and is very well maintained. More information can be found in the slides to a ROSCon 2019 talk (or go watch it on Vimeo).

To install SLAM toolbox you need ru following command

sudo apt install ros-<distro>-slam-toolbox

Running the Launch File

The launch file is much more simple than previous localisation, as the two map + localisation nodes are replaced with a single SLAM node. The simulation is started, followed by SLAM, and finally RViz is used to visualise the output. On line 31, a config file for RViz has been passed. This conveniently sets up the topics being visualised as well as any other settings such as the camera angle.

1import os

2from ament_index_python.packages import get_package_share_directory

3from launch import LaunchDescription

4from launch.actions import IncludeLaunchDescription

5from launch_ros.actions import Node, SetParameter

6from launch.launch_description_sources import PythonLaunchDescriptionSource

7

8

9def generate_launch_description():

10 ld = LaunchDescription()

11

12 # Specify the name of the package and path to xacro file in external package

13 pkg_name = 'example_gz_robot'

14

15 # Start simulation

16 launch_sim_world = IncludeLaunchDescription(

17 PythonLaunchDescriptionSource([os.path.join(

18 get_package_share_directory(pkg_name), 'launch'),

19 '/simulation_bringup.launch.py'])

20 )

21

22

23 # Start SLAM Toolbox with default parameters

24 launch_slam_toolbox = IncludeLaunchDescription(

25 PythonLaunchDescriptionSource([os.path.join(

26 get_package_share_directory('slam_toolbox'), 'launch'),

27 '/online_async_launch.py'])

28 )

29

30 # Rviz node

31 rviz_config_file = os.path.join(get_package_share_directory(pkg_name), 'rviz', 'slam.rviz')

32 node_rviz = Node(

33 package='rviz2',

34 namespace='',

35 executable='rviz2',

36 name='rviz2',

37 arguments=['-d', [rviz_config_file]]

38 )

39

40

41 # Add actions to LaunchDescription

42 # Simulation

43 ld.add_action(SetParameter(name='use_sim_time', value=True))

44 ld.add_action(launch_sim_world)

45 # SLAM Toolbox

46 ld.add_action(launch_slam_toolbox)

47 # Visualisation

48 ld.add_action(node_rviz)

49 return ld

Note

Default values have been used for SLAM Toolbox. This includes topics for the laserscan, names of global and odometry frames, along with many tuning parameters. When using the async_slam_toolbox_node directly, these parameters can be overridden with a custom .yaml file, or by passing parameters/reampping topics to the node in the launch file. Explore the online_async_launch.py file to investigate how this can be acheived for your robot.

Run the launch file with,

source ~/<YOUR_ROS_WS>/install/setup.bash

ros2 launch example_gz_robot slam.launch.py

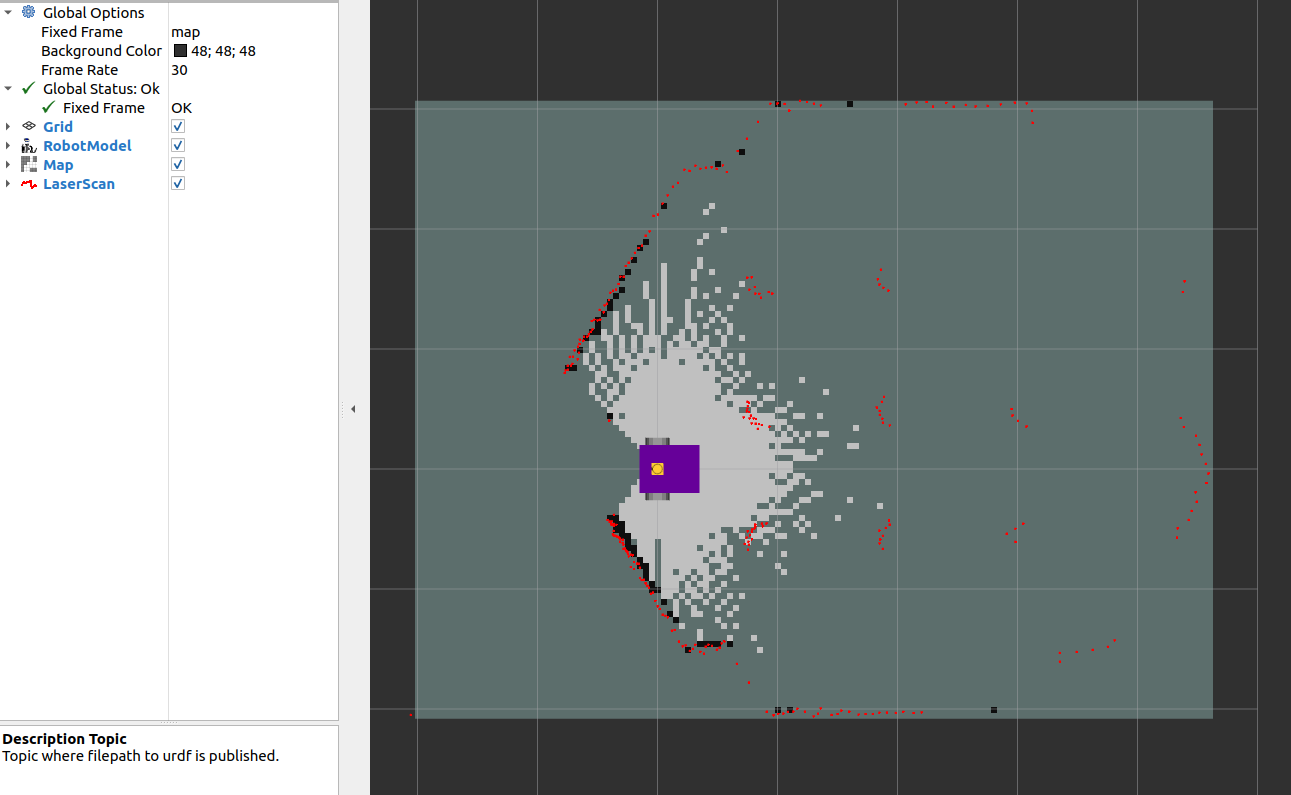

Intially, there is only a small portion of the map which has been generated (based on what the lidar can observe).

Using teleoperation (via teleop_twist_keyboard), move the robot around to fill in the map. The video below shows how SLAM periodically updates and fills in the map based on lidar observations, as well as keeping the pose of the robot well aligned with the world (as witnessed by the strong overlap between the lidar scan and the generated map). However, pure rotations or fast velocities can lead to poor localisation.

Using SLAM Maps

SLAM Toolbox publishes on the /map topic, this can be used for 2D autonomous navigation using Nav2 (for path planning). If you wish to use the map in the future (for localisation), you can save the map using the map_saver node. This can be achieved by calling the service to save the map (example service call at the bottom of the ros index page, with more details on the nav2 wiki). This map image can be used with AMCL using the same approach as the previous localisation exercise.